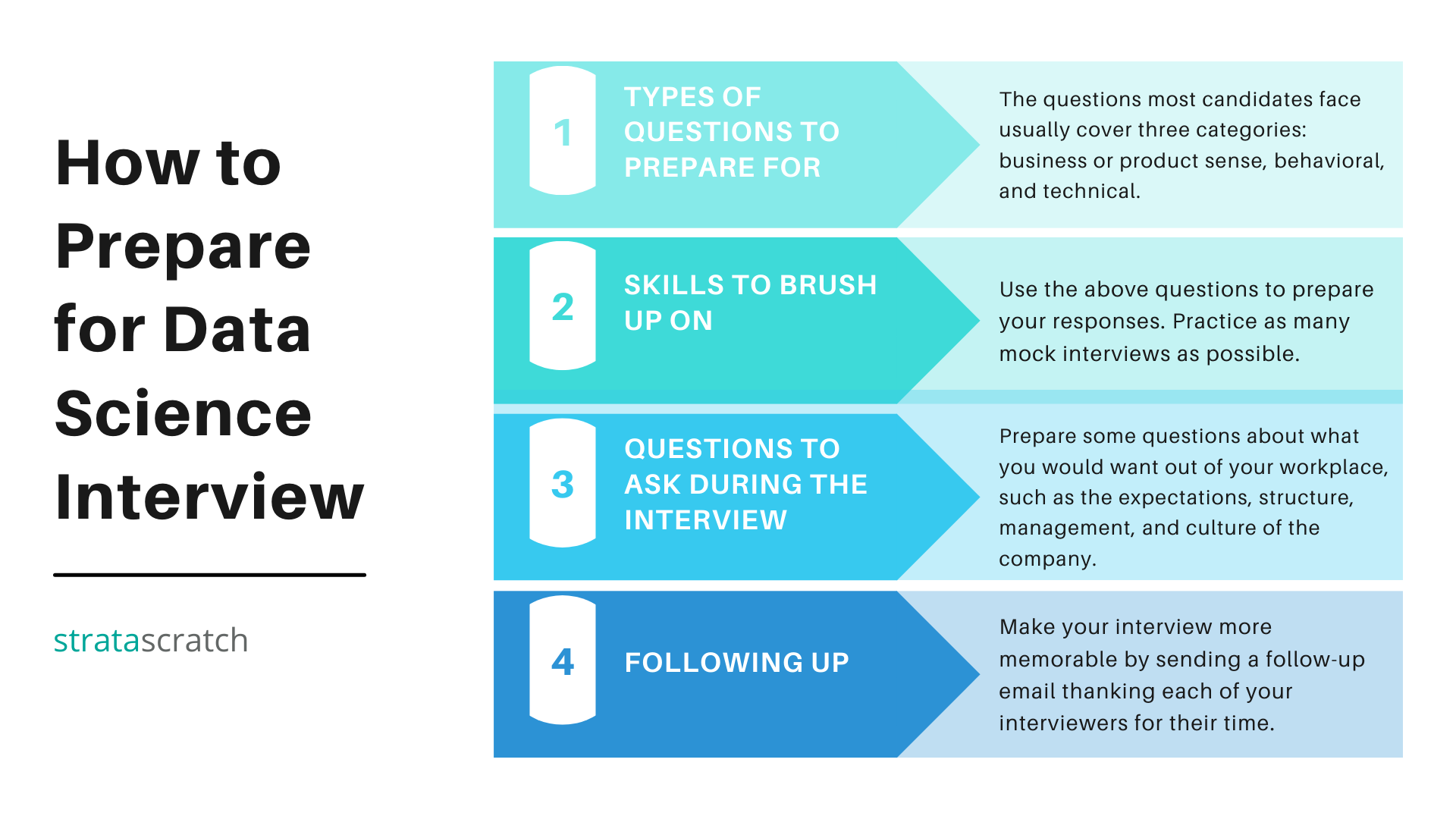

Amazon now commonly asks interviewees to code in a shared online document. This can vary; it might be on a physical whiteboard or a digital one. Check with your recruiter which format it will be and practice it a lot. Now that you know what questions to expect, let's focus on how to prepare.

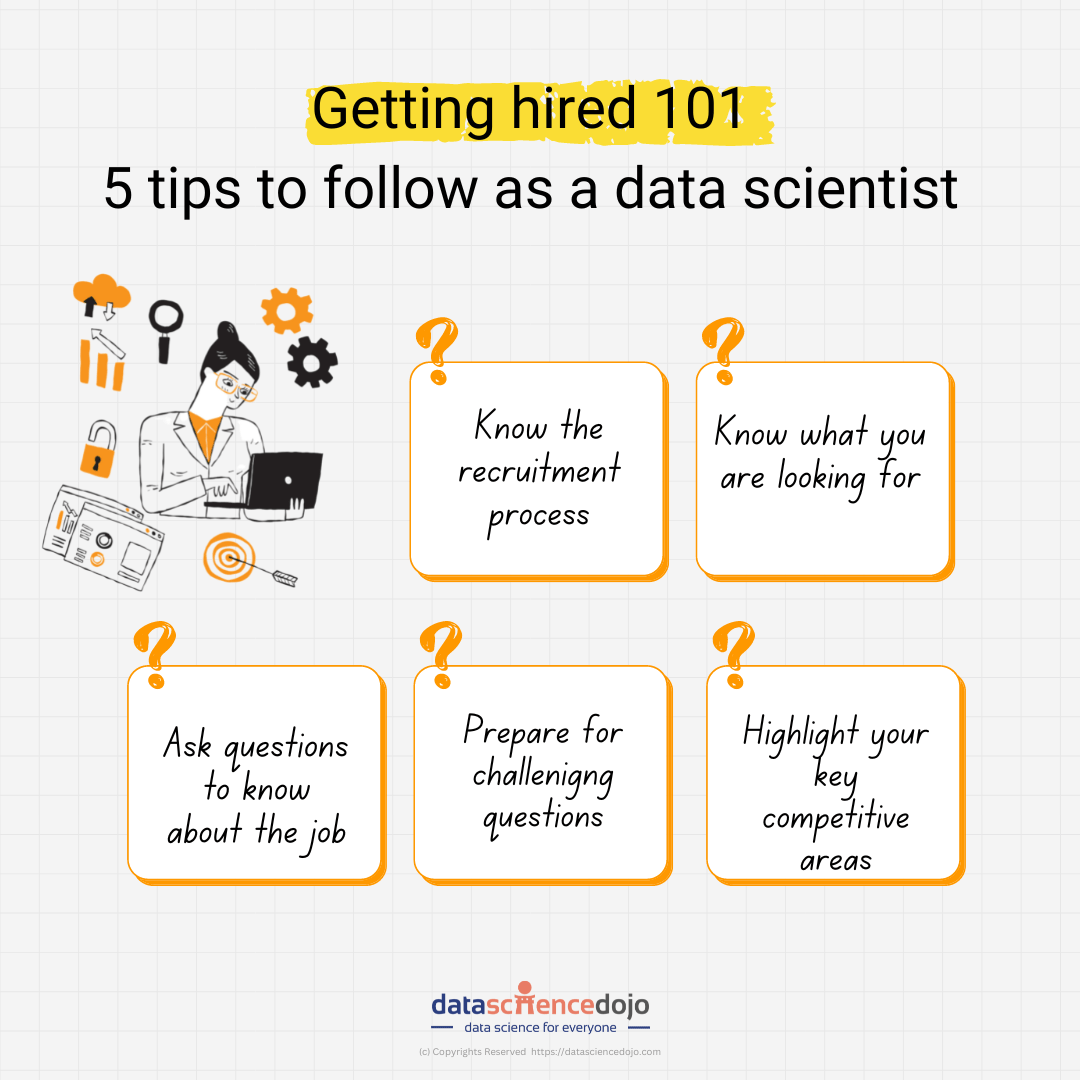

Below is our four-step prep plan for Amazon data scientist candidates. If you're preparing for more companies than just Amazon, then check out our general data science interview preparation guide. Before spending tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you. Most candidates skip this step.

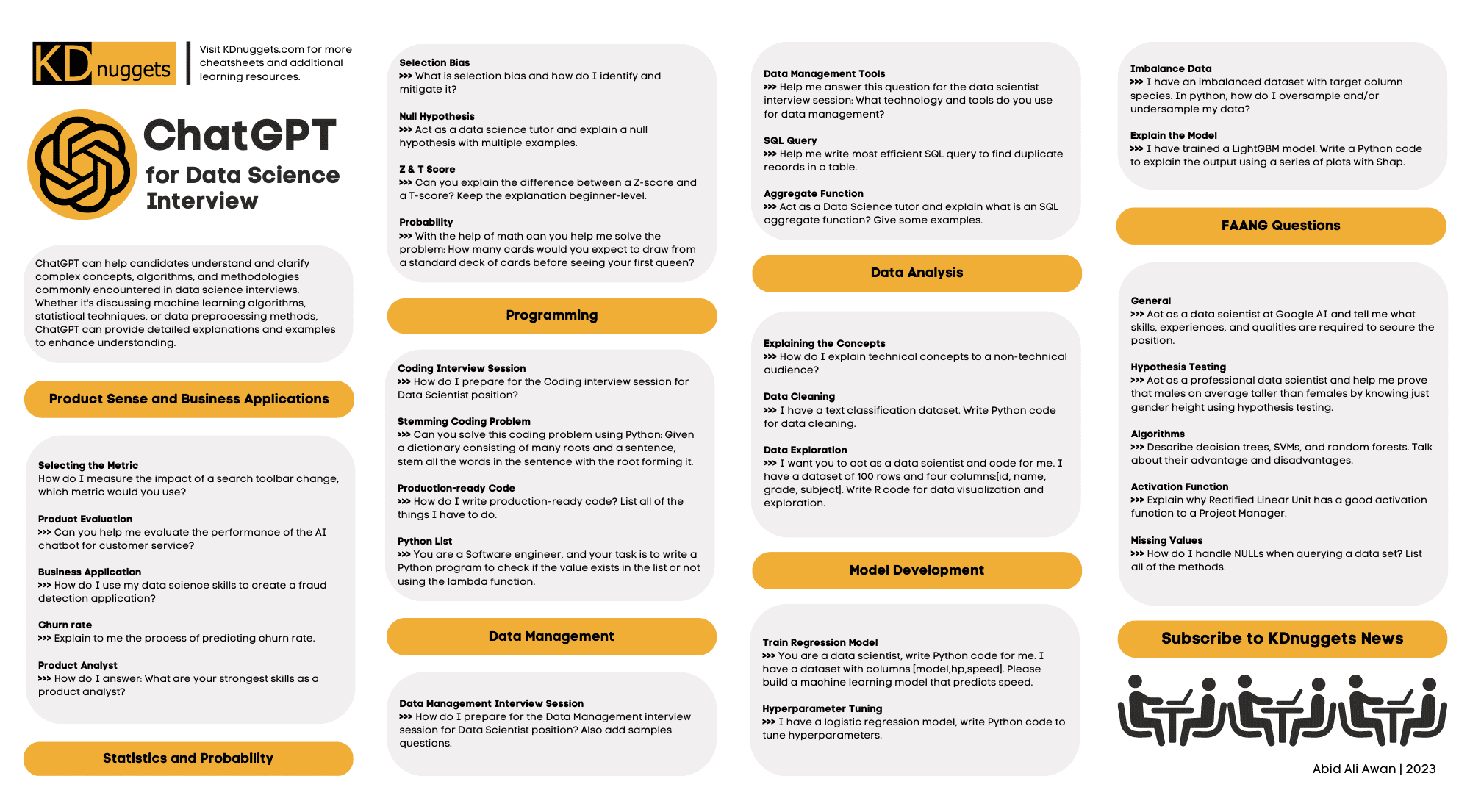

Practice the technique using example questions such as those in section 2.1, or those relevant to coding-heavy Amazon positions (e.g. Amazon software development engineer interview guide). Also, practice SQL and programming questions with medium and hard level examples on LeetCode, HackerRank, or StrataScratch. Take a look at Amazon's technical topics page, which, although it's designed around software development, should give you an idea of what they're looking out for.

Note that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. Some online platforms offer free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.

Facebook Data Science Interview Preparation

Make sure you have at least one story or example for each of the principles, drawn from a wide range of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

One of the main challenges of data scientist interviews at Amazon is communicating your answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you.

Be warned, as you may run into the following problems: it's hard to know whether the feedback you get is accurate; peers are unlikely to have insider knowledge of interviews at your target company; and on peer platforms, people often waste your time by not showing up. For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

How To Approach Machine Learning Case Studies

That's an ROI of 100x!

Data Science is quite a large and diverse field. As a result, it is really hard to be a jack of all trades. Traditionally, Data Science focuses on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mainly cover the mathematical basics one might need to brush up on (or even take a whole course on).

While I understand most of you reading this are more math-heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. As for programming languages, Python and R are the most popular ones in the Data Science space. However, I have also come across C/C++, Java and Scala.

Key Data Science Interview Questions For Faang

It is common to see most data scientists falling into one of two camps: Mathematicians and Database Architects. If you are the second one, this blog won't help you much (YOU ARE ALREADY AWESOME!).

Data collection could involve gathering sensor data, parsing websites or carrying out surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to run some data quality checks.
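To make the collection-to-quality-check step concrete, here is a minimal Python sketch that serializes records to JSON Lines (one JSON object per line) and then runs two basic quality checks: missing values per field and exact duplicate records. The records, field names and the helpers `to_json_lines` and `quality_report` are hypothetical and exist only for illustration; real pipelines usually check much more (value ranges, types, freshness).

```python
import json


def to_json_lines(records):
    """Serialize each record as one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


def quality_report(records, required_fields):
    """Count missing values per required field and exact duplicate records."""
    missing = {f: sum(1 for r in records if r.get(f) is None) for f in required_fields}
    seen = set()
    duplicates = 0
    for r in records:
        key = json.dumps(r, sort_keys=True)  # canonical form for duplicate detection
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


# Hypothetical raw records, e.g. readings parsed from a sensor feed.
raw_records = [
    {"sensor_id": "a1", "temperature": 21.5},
    {"sensor_id": "a2", "temperature": None},
    {"sensor_id": "a1", "temperature": 21.5},
]

jsonl = to_json_lines(raw_records)
parsed = [json.loads(line) for line in jsonl.splitlines()]
print(quality_report(parsed, ["sensor_id", "temperature"]))
# {'rows': 3, 'missing': {'sensor_id': 0, 'temperature': 1}, 'duplicates': 1}
```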

Tech Interview Preparation Plan

However, in cases of fraud, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for choosing the appropriate options for feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
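A quick way to spot class imbalance is to look at the label distribution before modelling. The sketch below assumes hypothetical binary labels (1 = fraud, 0 = legitimate) with the 2% fraud rate from the example above; `class_distribution` is an illustrative helper, not a library function.

```python
from collections import Counter


def class_distribution(labels):
    """Return the fraction of samples that belong to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


# Hypothetical labels: 1 = fraud, 0 = legitimate (2 fraud cases out of 100).
labels = [1] * 2 + [0] * 98
dist = class_distribution(labels)
print(dist)  # {1: 0.02, 0: 0.98}

# A "model" that always predicts legitimate already reaches 98% accuracy,
# which is why plain accuracy is a misleading metric under heavy imbalance.
always_legit_accuracy = sum(1 for y in labels if y == 0) / len(labels)
print(always_legit_accuracy)  # 0.98
```

This is one reason the imbalance matters for model evaluation: metrics such as precision and recall on the minority class say far more than accuracy here.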

In bivariate analysis, each feature is compared to the other features in the dataset. Scatter matrices allow us to find hidden patterns such as features that should be engineered together, or features that may need to be removed to avoid multicollinearity. Multicollinearity is a real problem for many models, such as linear regression, and hence needs to be handled accordingly.
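Scatter matrices are the visual tool (pandas, for instance, provides `pandas.plotting.scatter_matrix`). A numeric companion, swapped in here as a minimal standard-library sketch, is to compute pairwise Pearson correlations and flag pairs that are nearly collinear. The feature names, values and the 0.9 threshold are assumptions chosen for illustration.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def highly_correlated_pairs(features, threshold=0.9):
    """Return (feature_a, feature_b, r) for every pair with |r| >= threshold."""
    pairs = []
    for (name_a, a), (name_b, b) in combinations(features.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, round(r, 3)))
    return pairs


# Hypothetical features: height in cm and in inches carry the same information.
features = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "shoe_size": [40, 38, 44, 41, 43],
}
pairs = highly_correlated_pairs(features)
print(pairs)  # only the height_cm / height_in pair is flagged
```

A flagged pair is a candidate for dropping one of the two features (or combining them) before fitting a linear model.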

Features can also sit on very different scales. Think of internet usage data: YouTube users can go as high as gigabytes, while Facebook Messenger users use a couple of megabytes.
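One common fix for wildly different scales is min-max scaling, which maps a feature linearly into [0, 1]. This is a minimal sketch; the usage numbers (in megabytes) are hypothetical, and `min_max_scale` is an illustrative helper.

```python
def min_max_scale(values):
    """Rescale values linearly into [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical monthly usage in megabytes: light messaging users vs. heavy video users.
usage_mb = [2, 5, 3, 4000, 12000]
scaled = min_max_scale(usage_mb)
print([round(s, 4) for s in scaled])
```

Note that min-max scaling is sensitive to extreme values: the light users end up squeezed near 0. For heavy-tailed data like this, a log transform before scaling is a common alternative.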

Another issue is the use of categorical values. While categorical values are common in the data science world, realize that computers can only understand numbers.
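A standard way to turn categories into numbers is one-hot encoding: one 0/1 column per category. The sketch below is a plain-Python illustration with hypothetical app names; in practice, `pandas.get_dummies` or scikit-learn's `OneHotEncoder` do the same job.

```python
def one_hot_encode(values):
    """Map each categorical value to a 0/1 indicator vector (one column per category)."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        rows.append(row)
    return categories, rows


categories, encoded = one_hot_encode(["YouTube", "Messenger", "YouTube", "Email"])
print(categories)  # ['Email', 'Messenger', 'YouTube']
print(encoded)     # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```

One-hot encoding avoids implying an order between categories, which a naive 0/1/2 label encoding would. The trade-off is one new column per category, which leads to the dimensionality issue discussed next.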

Interviewbit For Data Science Practice

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Component Analysis, or PCA.
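Under the hood, PCA centers the data and finds the orthogonal directions of greatest variance, which can be read off a singular value decomposition. This is a minimal NumPy sketch on synthetic data (the data-generating setup is an assumption for illustration); scikit-learn's `sklearn.decomposition.PCA` is the usual production choice.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components using an SVD of the centered data."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    explained_variance = S**2 / (X.shape[0] - 1)
    ratio = explained_variance / explained_variance.sum()
    components = Vt[:n_components]
    projected = X_centered @ components.T
    return projected, ratio[:n_components]


rng = np.random.default_rng(0)
# Hypothetical data: 200 samples x 5 features, driven mostly by one hidden factor plus small noise.
latent = rng.normal(size=(200, 1))
X = latent @ rng.normal(size=(1, 5)) + 0.05 * rng.normal(size=(200, 5))

projected, ratio = pca(X, n_components=2)
print(projected.shape)  # (200, 2)
print(ratio)            # the first component should carry almost all of the variance
```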

The common categories of feature selection methods and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step.

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we take a subset of features and train a model using them. Based on the inferences that we draw from the previous model, we decide to add or remove features from the subset.
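As an illustration of a filter method, the sketch below ranks features by their absolute Pearson correlation with the target and keeps the top k, without training any model. The data and the helper `filter_by_correlation` are hypothetical.

```python
import numpy as np


def filter_by_correlation(X, y, feature_names, k):
    """Keep the k features with the largest absolute Pearson correlation to y."""
    scores = []
    for j, name in enumerate(feature_names):
        r = np.corrcoef(X[:, j], y)[0, 1]
        scores.append((name, abs(r)))
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in scores[:k]]


rng = np.random.default_rng(42)
n = 300
X = rng.normal(size=(n, 4))
# Hypothetical target that depends only on features f0 and f2.
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.1 * rng.normal(size=n)

selected = filter_by_correlation(X, y, ["f0", "f1", "f2", "f3"], k=2)
print(selected)
```

Because the score is computed feature by feature, a filter like this is cheap, but it only captures linear, one-at-a-time relationships. Wrapper methods trade that speed for model-aware selection.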

How Data Science Bootcamps Prepare You For Interviews

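Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. In embedded methods, feature selection happens as part of model training; LASSO and RIDGE are common ones. Their regularized least-squares objectives are given below for reference, where $\lambda \ge 0$ controls the strength of the penalty:

Lasso: $$\min_{\beta}\; \sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$$

Ridge: $$\min_{\beta}\; \sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$

The L1 penalty in Lasso can shrink coefficients exactly to zero, which is what removes features, while the L2 penalty in Ridge only shrinks them towards zero. That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.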
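Forward Selection, the first wrapper method listed, can be sketched as a greedy loop: start with no features and repeatedly add the one that most improves a fitted model. This minimal NumPy version scores candidates by training residual sum of squares of an ordinary least-squares fit and stops at a fixed budget; both choices are simplifying assumptions, since training RSS always decreases, and in practice you would score on a validation set or with cross-validation.

```python
import numpy as np


def rss(X, y):
    """Fit ordinary least squares with an intercept and return the residual sum of squares."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    residuals = y - A @ coef
    return float(residuals @ residuals)


def forward_selection(X, y, max_features):
    """Greedily add the feature that most reduces training RSS, up to max_features."""
    selected = []
    remaining = list(range(X.shape[1]))
    while remaining and len(selected) < max_features:
        _, best_j = min((rss(X[:, selected + [j]], y), j) for j in remaining)
        selected.append(best_j)
        remaining.remove(best_j)
    return selected


rng = np.random.default_rng(1)
X = rng.normal(size=(200, 5))
# Hypothetical target driven by columns 1 and 3 only.
y = 4.0 * X[:, 1] + 1.5 * X[:, 3] + 0.1 * rng.normal(size=200)
print(forward_selection(X, y, max_features=2))
```

Backward Elimination is the mirror image: start from all features and repeatedly drop the one whose removal hurts the score least.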

Supervised Learning is when the labels are available. Unsupervised Learning is when the labels are not available. That being said, never mix the two up! This mistake is enough for the interviewer to cancel the interview. Another rookie mistake people make is not normalizing the features before running the model.
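One common way to normalize features is z-score standardization: subtract each feature's mean and divide by its standard deviation. A detail interviewers often probe is that these statistics should be learned on the training data only and then reused on test data. This is a minimal NumPy sketch with hypothetical values; scikit-learn's `StandardScaler` implements the same fit/transform idea.

```python
import numpy as np


def fit_standardizer(X_train):
    """Learn per-feature mean and standard deviation from the training data only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled instead of dividing by zero
    return mean, std


def standardize(X, mean, std):
    """Apply z-score scaling with statistics learned on the training set."""
    return (X - mean) / std


# Hypothetical features on very different scales (e.g. a count vs. megabytes).
X_train = np.array([[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]])
X_test = np.array([[2.0, 300.0]])

mean, std = fit_standardizer(X_train)
Z_train = standardize(X_train, mean, std)
Z_test = standardize(X_test, mean, std)  # reuse training statistics to avoid leakage
print(Z_train)
print(Z_test)
```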

As a rule of thumb, Linear and Logistic Regression are among the most basic and commonly used Machine Learning algorithms out there, so start with them before doing any more complex analysis. One common interview blunder people make is starting their analysis with a more complex model like a Neural Network. No doubt, Neural Networks can be highly accurate. However, benchmarks are vital: a simple baseline tells you whether the extra complexity actually pays off.
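To show what such a baseline looks like, here is a minimal logistic regression trained with batch gradient descent in NumPy, compared against the trivial majority-class accuracy. The synthetic data, learning rate and epoch count are assumptions for illustration, and for brevity accuracy is measured on the training data; in practice you would use a held-out split, and scikit-learn's `LogisticRegression` instead of a hand-rolled loop.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=500):
    """Fit logistic regression with batch gradient descent on the mean log-loss."""
    Xb = np.column_stack([np.ones(len(y)), X])  # prepend an intercept column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = sigmoid(Xb @ w)
        grad = Xb.T @ (p - y) / len(y)  # gradient of the mean log-loss
        w -= lr * grad
    return w


def predict(w, X):
    Xb = np.column_stack([np.ones(len(X)), X])
    return (sigmoid(Xb @ w) >= 0.5).astype(int)


rng = np.random.default_rng(7)
X = rng.normal(size=(400, 2))
# Hypothetical labels defined by a linear boundary.
y = (X[:, 0] + X[:, 1] > 0).astype(int)

w = train_logistic_regression(X, y)
baseline_accuracy = (predict(w, X) == y).mean()
majority_accuracy = max(y.mean(), 1 - y.mean())
print(f"majority-class accuracy: {majority_accuracy:.3f}")
print(f"logistic regression accuracy: {baseline_accuracy:.3f}")
```

Any more complex model, such as a Neural Network, should then be judged by how much it improves on these numbers.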